We have a feature in eramba that, every hour, connects to our support server and “pulls” a JSON file with “news” (stuff we want you to know). We have never actually used this feature, since we never publish any news, but we did notice that our backend servers (where you connect every hour) are overloaded and are most likely dropping connections.

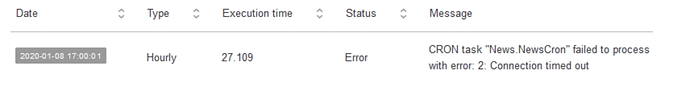

For that reason, your hourly cron might sometimes report an “Error”. It is not a blocking error: the cron will still do what it is supposed to do, but its “News” task will fail. This release removes that API request so you no longer get the “Error”.

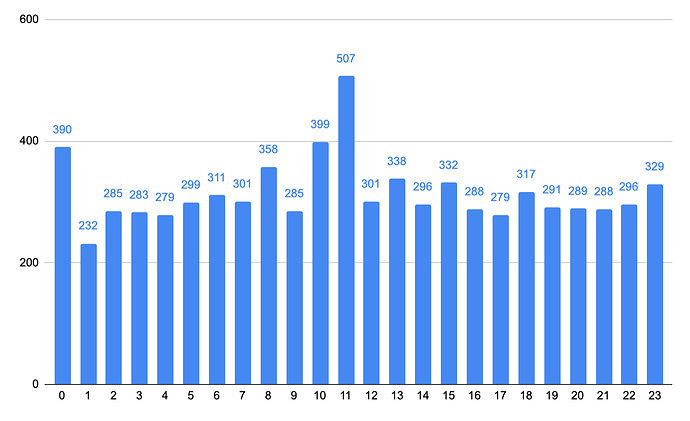

We will need to re-architect our backend servers into something that scales. We noticed that beyond 300 to 400 simultaneous connections, requests start to queue and eventually some of them are dropped. The graph below shows the number of simultaneous connections for every hour (UK time zone).

It is no secret that we are not Linux specialists, so we’ll need to investigate how to make this work in a more scalable way. This is when we ask a friend of a friend of a friend for advice…